Harnessing AI for audiences at the Washington Post

The man in charge of the Washington Post's tech platform thinks AI can create the right user experiences to attract young people.

Matthew Monahan, president of Arc XP

Monahan thinks we have plenty of challenges ahead. Young people are no longer becoming direct customers as they age, which is something we used to be able to rely on. And we’re not seeing AI sending significant traffic. Our entire future looks perilous.

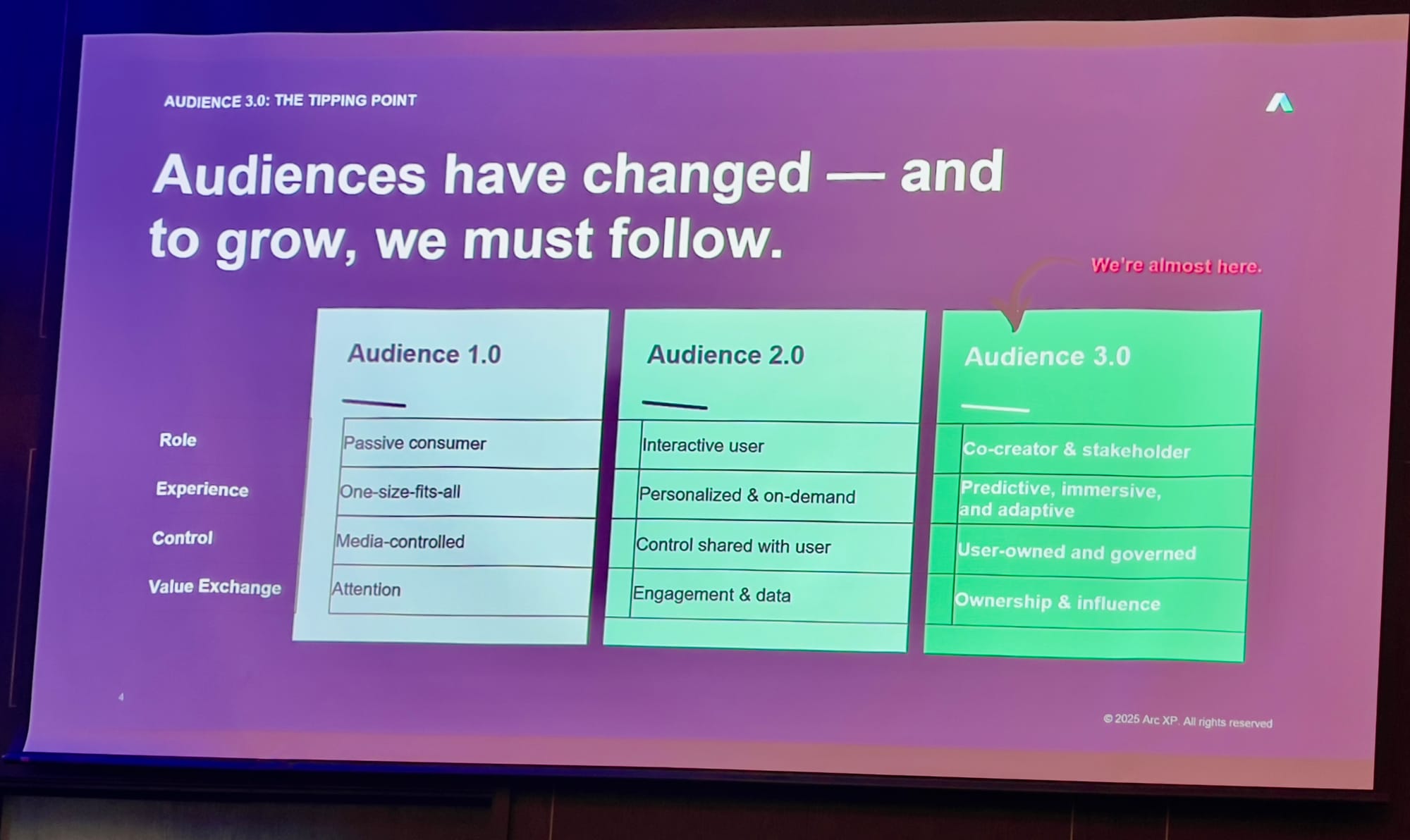

So, what do young people want? The answer seems clear: multimedia experiences, interactive experiences. There’s a disconnect between our existing user experiences and what audiences actually expect. In fact, many newsrooms are stuck at what he calls the Audience 1.0 view of their audiences, but we’re about to hit the Audience 3.0 world, and getting there is a big leap.

Here’s how he breaks that down:

Using AI to give audiences what they want

AI isn’t the competition, he argues; the competition is companies using AI strategically to help them accelerate. Can you use AI to empower and amplify journalists, rather than replacing them?

If you look across the industry, most AI is being used for internal functions, rather than in creating new audience experiences. Can AI unlock new interactive audience experiences? The Washington Post is exploring that, using their tech platform.

The key is to build something whose results you can be confident in, he suggests. In other words, something which minimises or eliminates hallucinations. They’re using Retrieval-Augmented Generation (RAG) to do that, with the LLM referring back to the Post’s own content. Language models are a good way to understand what audiences want and to give them responses, but there are other ways of pulling the information itself from the archives. This is the heart of the RAG approach, which seems to be an LLM interaction layer on top of more traditional information retrieval. Use the LLM to understand the question and to format the response, but use other tech to supply the content.

Fascinating.
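To make that division of labour concrete, here is a minimal sketch of the retrieve-then-generate pattern. It is not the Post's or Arc XP's implementation: the archive, the function names, and the crude word-overlap ranking are all assumptions made for illustration, and the `generate` argument stands in for whatever language model a real system would call.

```python
import re
from collections import Counter

# Hypothetical mini-archive; a real system would index published articles.
ARCHIVE = [
    {"id": "a1", "title": "City council approves new transit budget",
     "text": "The council voted to approve a transit budget that funds new bus routes."},
    {"id": "a2", "title": "Local team wins championship",
     "text": "The team won the championship after a late goal in the final."},
    {"id": "a3", "title": "Transit ridership rebounds",
     "text": "Bus and rail ridership rose this year as new routes opened."},
]

# Small illustrative stopword list so common words do not drive the ranking.
STOPWORDS = {"the", "a", "an", "of", "to", "in", "and", "what", "did",
             "about", "is", "as", "that", "this"}

NO_MATCH = "Sorry, I couldn't find anything in the archive on that."


def tokenize(text):
    """Lowercase the text, split it into words, and drop stopwords."""
    return [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS]


def retrieve(question, archive, k=2):
    """Traditional retrieval step: rank articles by words shared with the question."""
    q = Counter(tokenize(question))
    scored = []
    for doc in archive:
        d = Counter(tokenize(doc["title"] + " " + doc["text"]))
        score = sum(min(q[w], d[w]) for w in q)
        if score > 0:
            scored.append((score, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_prompt(question, passages):
    """Wrap the retrieved passages in instructions that confine the model to them."""
    sources = "\n".join(f"[{p['id']}] {p['title']}: {p['text']}" for p in passages)
    return (
        "Answer the question using only the sources below, "
        "and cite source ids in square brackets.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )


def answer(question, archive, generate):
    """Retrieve first; only call the model if the archive has something relevant."""
    passages = retrieve(question, archive)
    if not passages:
        return NO_MATCH
    return generate(build_prompt(question, passages))


if __name__ == "__main__":
    # Stand-in for a model call: it simply echoes the grounded prompt.
    print(answer("What did the council decide about the transit budget?",
                 ARCHIVE, generate=lambda prompt: prompt))
```

The point of the shape is that the model never answers from its own memory: if retrieval finds nothing, no model call is made at all, and when it does find something, the source ids travel with the text so the response can show where it came from.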

The Incomplete Experience of Chatbots

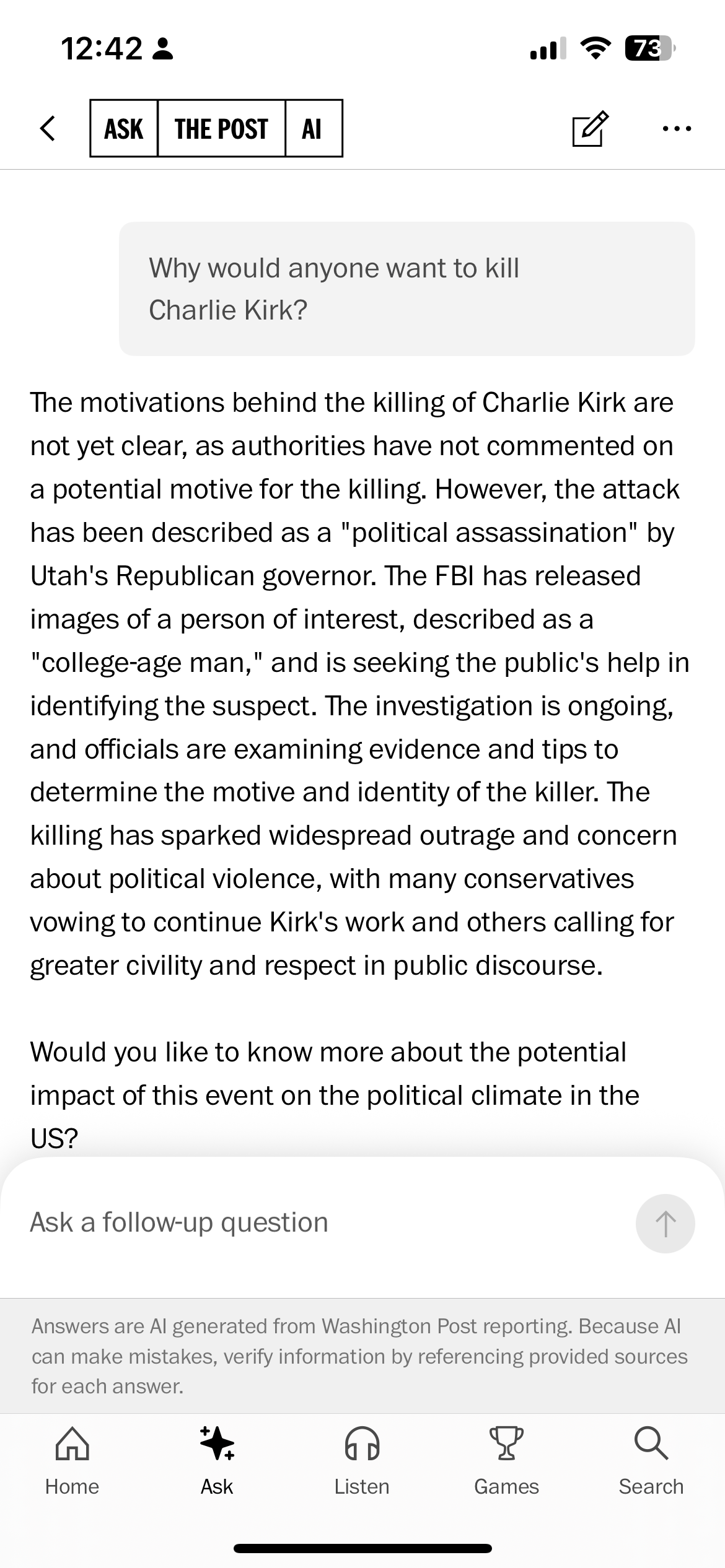

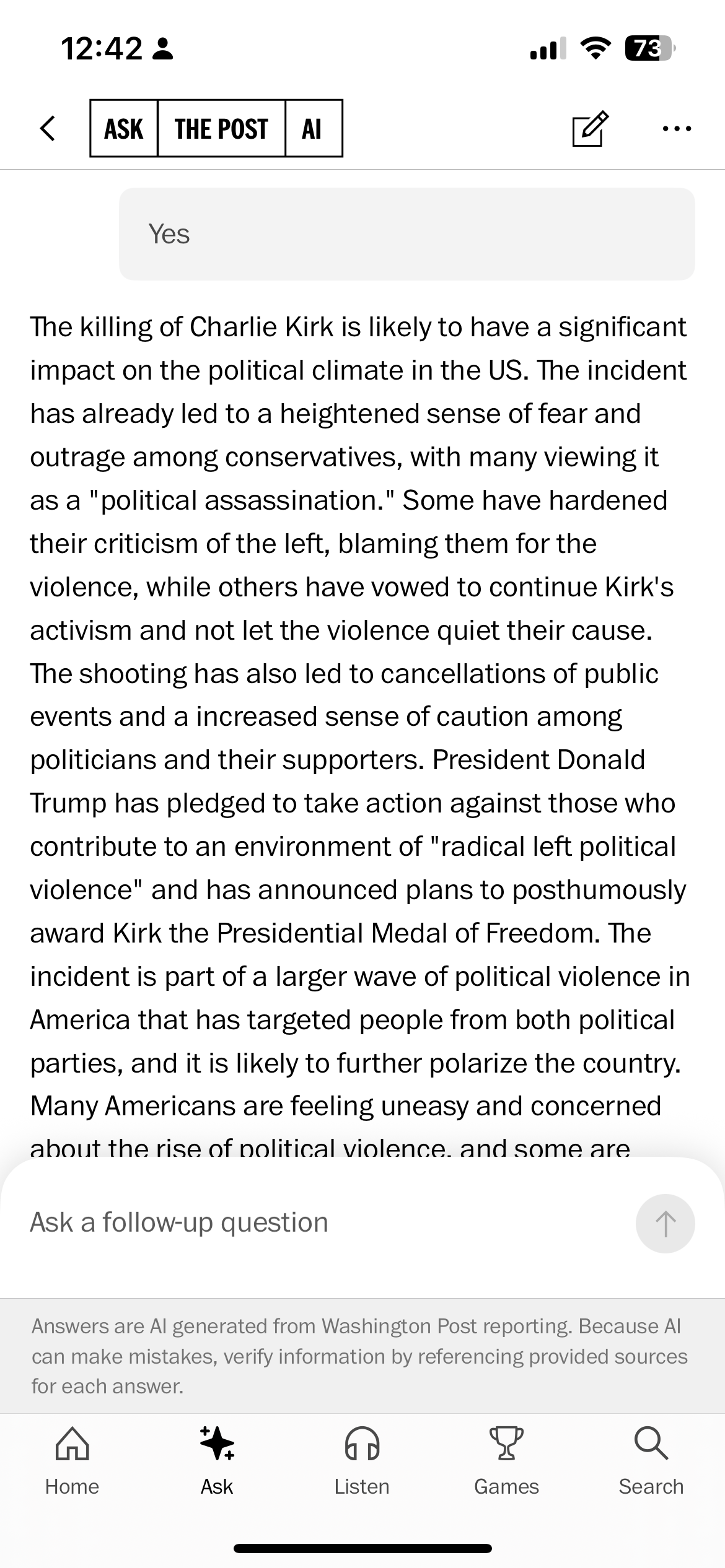

He thinks chatbots are pretty incomplete experiences when it comes to journalism. They’re aiming for something more interactive, which is focused on audience questions. This is emerging as an “Ask” tab in the app, where readers can explore stories in their own way. Based on the examples cited, it’s more like an article is built for you based on your queries. So, still a chat-style interface, but with a focus on different outputs.

They’re relying quite heavily on both source transparency and video in these generated quasi-article experiences — they’re not just impersonal chat experiences.

The technology allows story-level personalisation, which is fascinating as an idea. He didn’t really have time to explore it, but it clearly alarmed some in the audience.

Does this mean the death of the article? Will there still be articles? What’s the difference between a story and an article? All typical questions from the audience. His response?

“There will be some amazing stories we create, but they will be told in a lot of different formats. It’s a way to bring the journalism we spend so much time, money, and effort on to people in the formats they want.”

Another question: Have we reached peak LLM?

“Language model development is slowing down, for sure. It’s likely that they will become libraries developers use in future, like machine learning is now.”

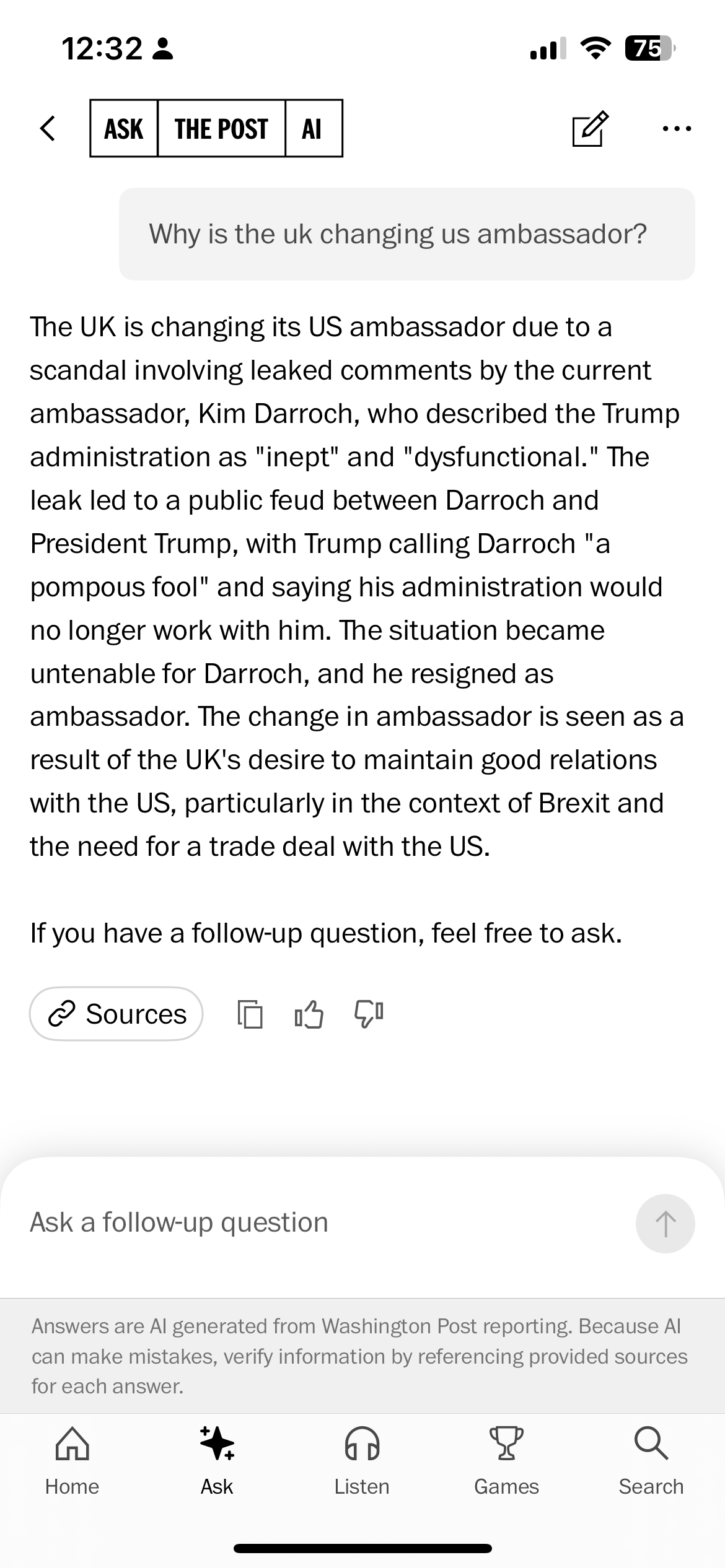

Testing the Washington Post's Ask

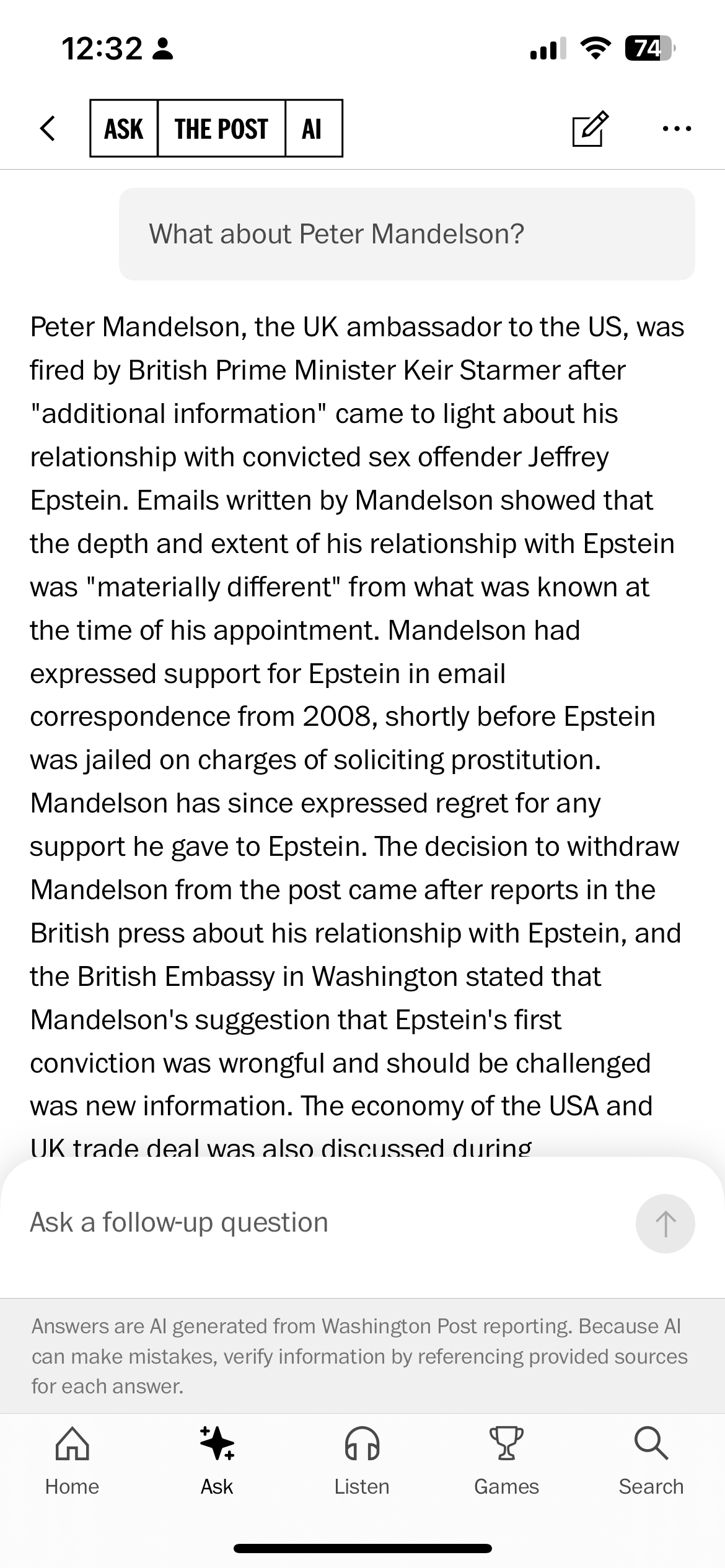

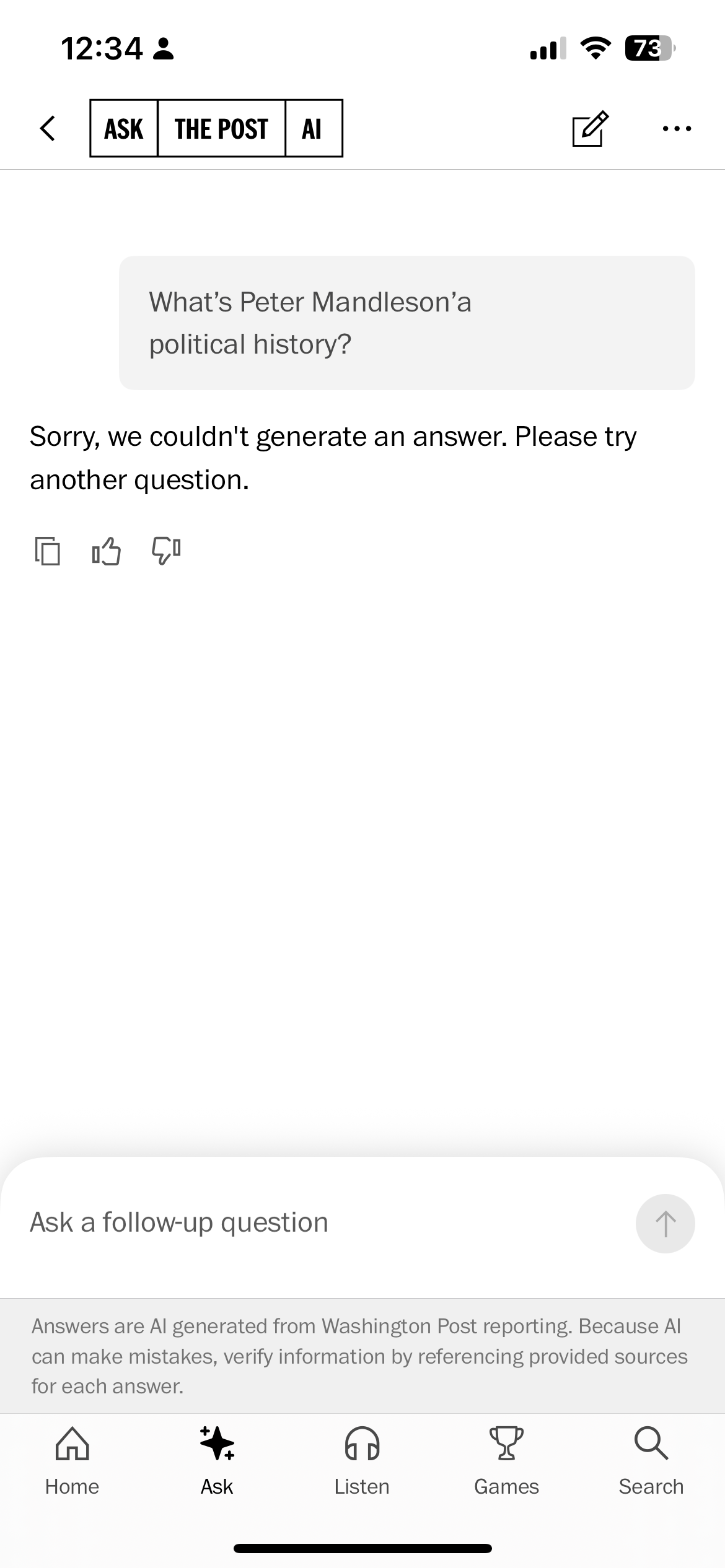

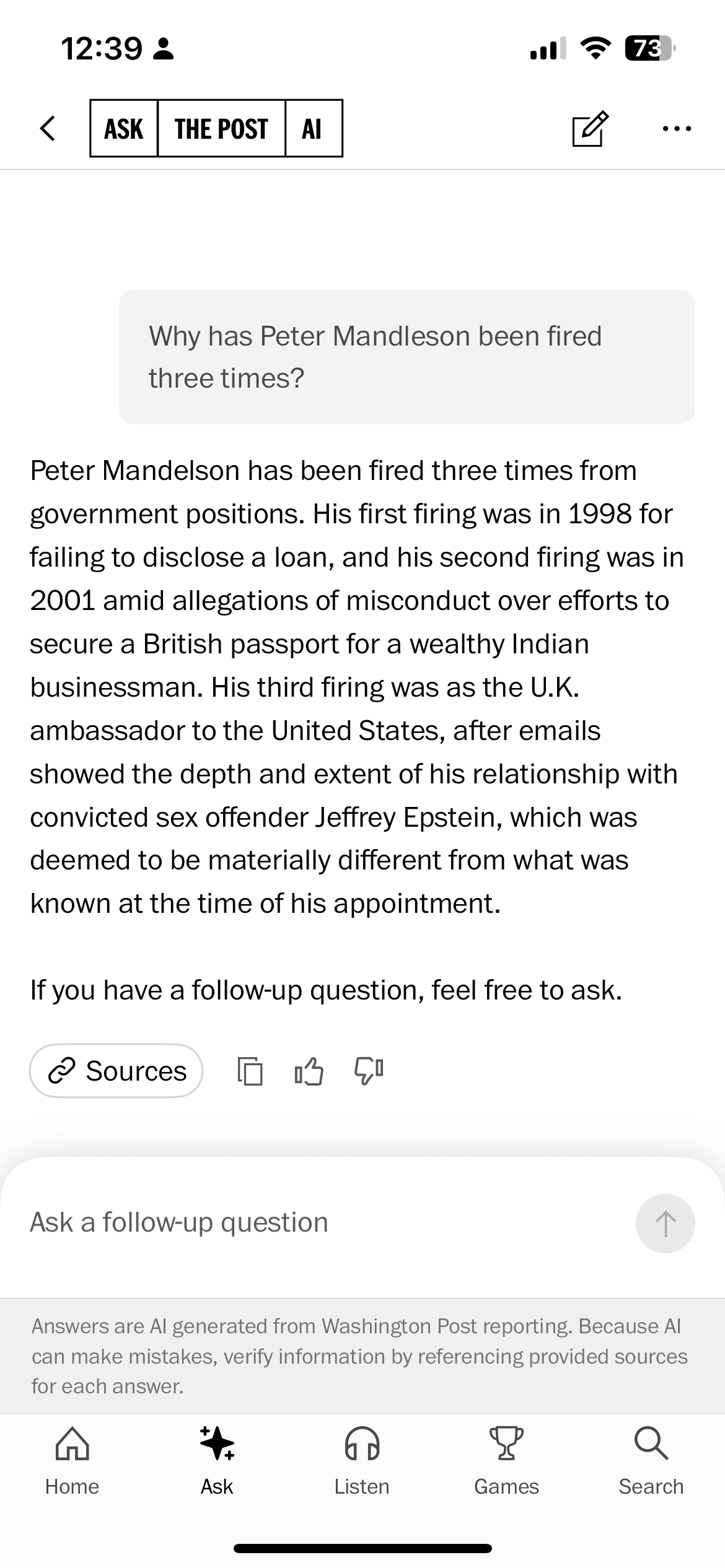

I tested this new functionality in the WP app, on a story with both UK and US interest. The results weren't great. Initially, the AI got it wrong. Or, at least, it gave me an out-of-date answer, referring to a previous incident with a UK ambassador to the US. Rephrasing to be more specific got me a more useful answer, but my attempts to dig deeper on the story had mixed results:

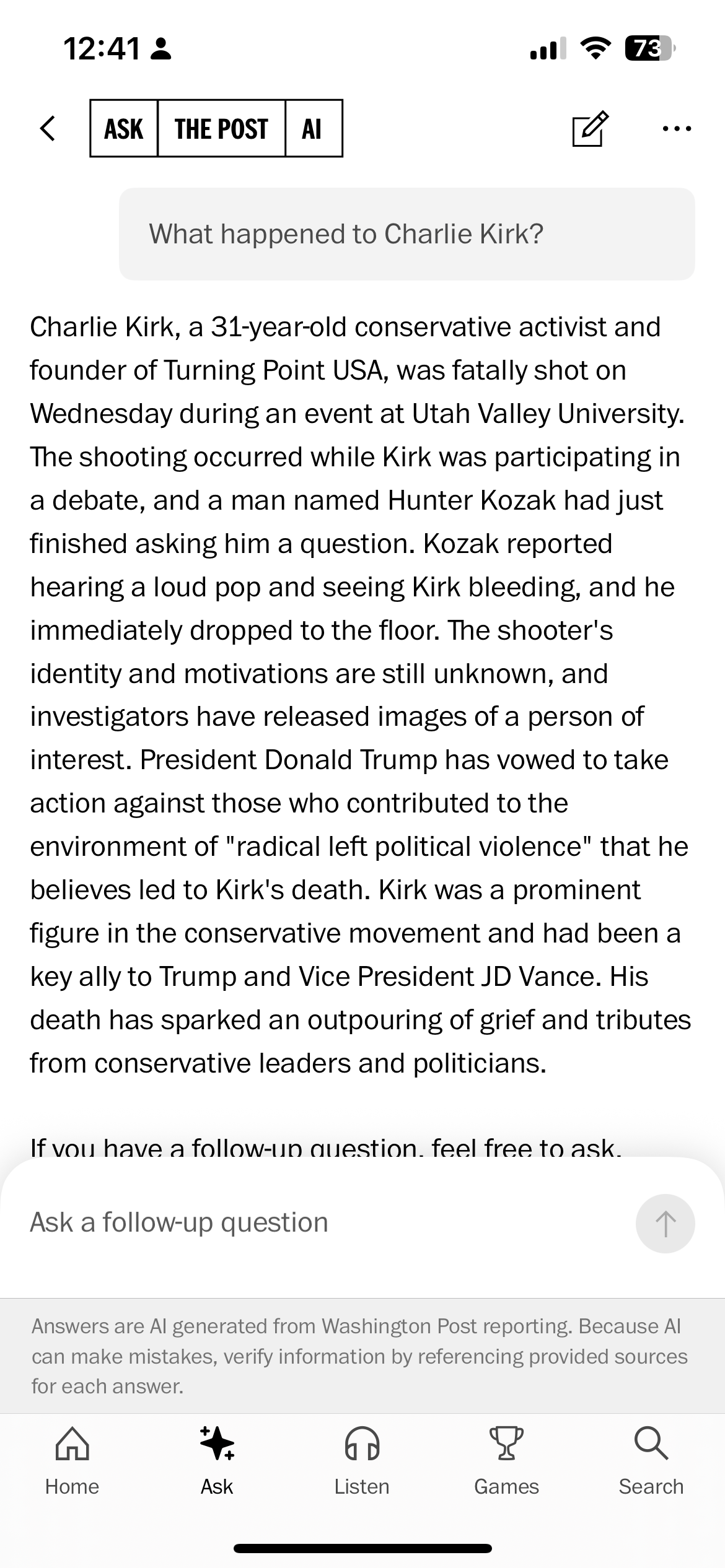

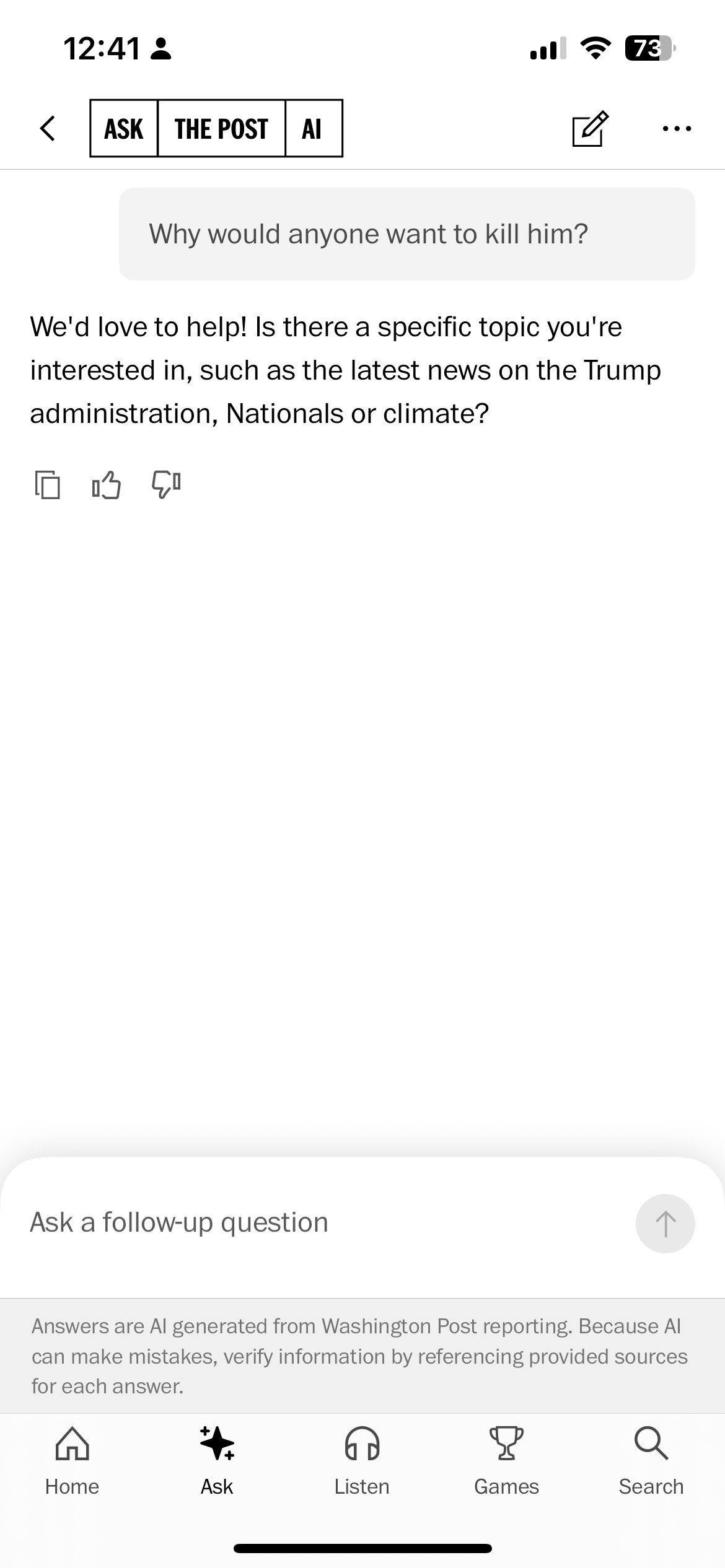

It worked rather better on a more US-centric story, but it sometimes failed to “remember” who we were talking about.

I like the underlying idea, but between the time taken to generate the answers (some seconds in each case) and the problems I had getting the answers I wanted, this feels like an inferior approach to just pointing someone to a well-written explainer. Of course, that could change over time – but, right now, this feels like another AI experiment pushed live before it's ready.